Artificial intelligence hardly surprises anyone nowadays: it keeps gaining popularity and evolving quickly, and voice assistants and their functions are becoming ever more diverse.

What are voice recognition assistants able to do now?

We all remember that one of the first voice assistants appeared in 2011: Siri, created by Apple. After that, Microsoft, Amazon and Google released voice assistants of their own.

Today Siri, Google Assistant and Alexa can help you find information on the Internet, make a call or send a message, post on social networks, launch an application or play music. You activate a voice assistant with a special wake phrase such as “OK Google”, “Hey Siri” or “Alexa”.

In general, voice assistants have reached a new level: they have become smarter and more capable, and their makers now compete over whose virtual assistant is the smartest.

On iOS, Siri is still the faithful assistant.

Siri is your personal “smart” assistant that can cope with many different tasks: it can send messages, dial phone numbers, keep track of your calendar and more.

The easiest way to talk to Siri is the “Hey Siri” feature: say the phrase followed by your request, for example “Hey Siri, what time is it?” Siri then determines which of your devices you are currently using; for this, Bluetooth needs to be turned on.

There is also a convenient feature for announcing calls: Siri can announce all incoming calls, or only the calls you receive while driving. Siri also knows the news, the weather forecast and directions, and can even recommend what to watch on TV.

Users of Android devices can also download voice assistants to their smartphones. Let’s review the best voice recognition assistants for Android.

Google Now is perhaps one of the most capable voice assistants for Android.

It can analyze your mail and search history, create notes and play music. It is free and comes with Android devices, so users do not need to download and install it. Google Now also integrates easily with other Google services.

For example, you can make a voice search in the Google Chrome browser or dictate a note to Google Keep. You can also set a timer with a voice command, find an event in your calendar, send a message to a contact whose number is already in your phone book, or get directions.

Alexa

Amazon’s voice assistant Alexa can now not only tell you about the weather or turn on your favourite radio station, but also add items to your shopping cart, play music through Amazon Prime Music, answer various questions and do simple calculations.

Bixby, a smart electronic assistant, will soon become one of the most important features of Samsung smartphones. According to its developers, Bixby will let users unlock the phone and make a call much faster: it will be enough to give the voice assistant a command, and it will do the rest quickly.

Bixby is a voice service that allows users to interact with their mobile devices quickly and efficiently.

With this voice assistant, you can also make various purchases and payments by voice.

As for the languages in which you can “communicate” with Bixby, there are 8 of them now, and the system may well learn to recognize many more in the near future.

Now we would like to share our experience of developing our own voice assistant.

To build it, our team used the following tools and libraries:

- Programming language: Kotlin

- MVP

- RxKotlin, RxAndroid, RxPermissions

- Timber

- Kodein – for DI

- SpeechRecognitionView – for speech recognition animation.

You can find all the dependencies in the source code; the link to it is at the end of the article.

Handling the screen turning on

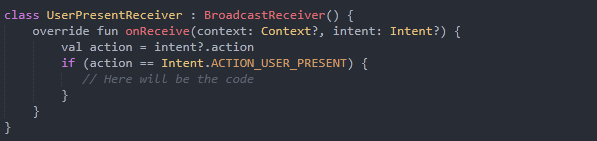

To handle the event that is broadcast when the user becomes present after the device wakes up, we use an intent filter for the `android.intent.action.USER_PRESENT` action.

The next step is receiving the event itself. To do this, we create a BroadcastReceiver that checks the intent action:

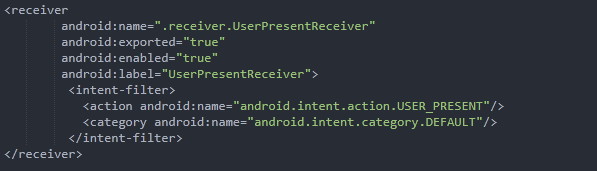
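A minimal sketch of such a receiver is shown below. It assumes the class name UserPresentReceiver (the name the article uses later) and logs with android.util.Log instead of Timber to stay dependency-free; the log tag is a hypothetical choice.

```kotlin
import android.content.BroadcastReceiver
import android.content.Context
import android.content.Intent
import android.util.Log

// Receives the USER_PRESENT broadcast, which Android sends once the user
// is present (has unlocked the device) after the screen turns on.
class UserPresentReceiver : BroadcastReceiver() {

    override fun onReceive(context: Context, intent: Intent) {
        // Ignore anything that is not the "user is present" event.
        if (intent.action != Intent.ACTION_USER_PRESENT) return

        Log.d(TAG, "User is present after the screen turned on")
        // The speech-recognition service is started from here in a later step.
    }

    private companion object {
        const val TAG = "UserPresentReceiver" // hypothetical log tag
    }
}
```

Note that since Android 8.0 (API level 26) most implicit broadcasts are no longer delivered to receivers declared only in the manifest. If ACTION_USER_PRESENT does not arrive on newer devices, it is worth checking the implicit-broadcast exceptions list and, if needed, registering the receiver at runtime with Context.registerReceiver.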

To make our receiver work, we register it in the manifest file with a `<receiver>` element whose `<intent-filter>` contains the `android.intent.action.USER_PRESENT` action.

Now we can intercept the screen-on event. The next step is to recognize a command after the screen turns on.

To start recording speech, the app needs the RECORD_AUDIO permission. To declare it, add the `<uses-permission android:name="android.permission.RECORD_AUDIO" />` line to the manifest:

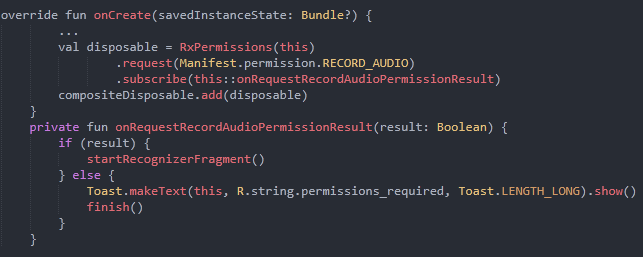

In the Activity code, we also add lines that check whether the RECORD_AUDIO permission has been granted.

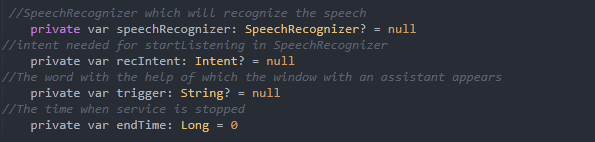

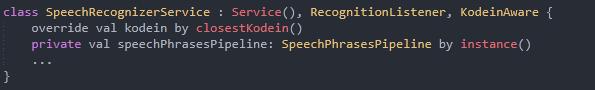
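The article relies on RxPermissions for this check; the sketch below swaps in the plain AndroidX API (ContextCompat.checkSelfPermission and ActivityCompat.requestPermissions) so it stays self-contained. MainActivity, REQUEST_RECORD_AUDIO and onRecordAudioGranted() are hypothetical names.

```kotlin
import android.Manifest
import android.content.pm.PackageManager
import android.os.Bundle
import androidx.appcompat.app.AppCompatActivity
import androidx.core.app.ActivityCompat
import androidx.core.content.ContextCompat

class MainActivity : AppCompatActivity() {

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        if (hasRecordAudioPermission()) {
            onRecordAudioGranted()
        } else {
            // Shows the system dialog; the answer arrives in onRequestPermissionsResult.
            ActivityCompat.requestPermissions(
                this, arrayOf(Manifest.permission.RECORD_AUDIO), REQUEST_RECORD_AUDIO
            )
        }
    }

    override fun onRequestPermissionsResult(
        requestCode: Int, permissions: Array<out String>, grantResults: IntArray
    ) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults)
        if (requestCode == REQUEST_RECORD_AUDIO &&
            grantResults.firstOrNull() == PackageManager.PERMISSION_GRANTED
        ) {
            onRecordAudioGranted()
        }
    }

    private fun hasRecordAudioPermission(): Boolean =
        ContextCompat.checkSelfPermission(this, Manifest.permission.RECORD_AUDIO) ==
            PackageManager.PERMISSION_GRANTED

    private fun onRecordAudioGranted() {
        // Speech recognition can be started from here.
    }

    private companion object {
        const val REQUEST_RECORD_AUDIO = 1 // arbitrary request code
    }
}
```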

Creating a service that listens for speech during a given time interval and launches the app on a trigger word

Speech recognition with Android's SpeechRecognizer must run on the main thread. So we create a sticky service, whose lifecycle callbacks run on the main thread, and host the SpeechRecognizer in it.

The service extends Service and implements RecognitionListener.

The next step is registering the service in the manifest with a `<service>` element.

Then we declare the properties the service needs.

To make things more convenient, we declare a companion object in the service with the required constants and a method that creates the Intent for launching the service:

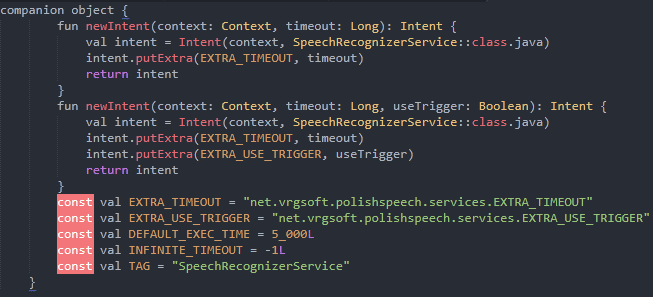
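Putting these service steps together, a minimal sketch of such a service might look like the following. Only the class name SpeechRecognizerService comes from the article; the extra key, the 30-second default window, createIntent() and restartOrStop() are hypothetical, and the callbacks only log instead of forwarding events anywhere.

```kotlin
import android.app.Service
import android.content.Context
import android.content.Intent
import android.os.Bundle
import android.os.IBinder
import android.os.SystemClock
import android.speech.RecognitionListener
import android.speech.RecognizerIntent
import android.speech.SpeechRecognizer
import android.util.Log

// Sticky service that hosts SpeechRecognizer. Service callbacks run on the
// main thread, which is where SpeechRecognizer must be created and used.
class SpeechRecognizerService : Service(), RecognitionListener {

    private var speechRecognizer: SpeechRecognizer? = null
    private var stopAtMs = 0L // elapsedRealtime() at which listening should end

    override fun onBind(intent: Intent?): IBinder? = null

    override fun onStartCommand(intent: Intent?, flags: Int, startId: Int): Int {
        // The intent is null when the system recreates a sticky service.
        val durationMs = intent?.getLongExtra(EXTRA_DURATION_MS, DEFAULT_DURATION_MS)
            ?: DEFAULT_DURATION_MS
        stopAtMs = SystemClock.elapsedRealtime() + durationMs
        if (speechRecognizer == null) {
            speechRecognizer = SpeechRecognizer.createSpeechRecognizer(this)
                .also { it.setRecognitionListener(this) }
        }
        startListening()
        return START_STICKY // ask the system to recreate the service if it is killed
    }

    override fun onDestroy() {
        speechRecognizer?.destroy()
        speechRecognizer = null
        super.onDestroy()
    }

    private fun startListening() {
        val recognizerIntent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH)
            .putExtra(
                RecognizerIntent.EXTRA_LANGUAGE_MODEL,
                RecognizerIntent.LANGUAGE_MODEL_FREE_FORM
            )
            .putExtra(RecognizerIntent.EXTRA_PARTIAL_RESULTS, true)
        speechRecognizer?.startListening(recognizerIntent)
    }

    // Restart while the listening window is still open, otherwise stop the service.
    private fun restartOrStop() {
        if (SystemClock.elapsedRealtime() < stopAtMs) startListening() else stopSelf()
    }

    override fun onResults(results: Bundle?) {
        val phrases = results?.getStringArrayList(SpeechRecognizer.RESULTS_RECOGNITION)
        Log.d(TAG, "Results: $phrases")
        restartOrStop()
    }

    override fun onError(error: Int) {
        Log.d(TAG, "Recognition error: $error")
        restartOrStop()
    }

    // The remaining callbacks are left empty in this sketch.
    override fun onReadyForSpeech(params: Bundle?) {}
    override fun onBeginningOfSpeech() {}
    override fun onRmsChanged(rmsdB: Float) {}
    override fun onBufferReceived(buffer: ByteArray?) {}
    override fun onEndOfSpeech() {}
    override fun onPartialResults(partialResults: Bundle?) {}
    override fun onEvent(eventType: Int, params: Bundle?) {}

    companion object {
        private const val TAG = "SpeechRecognizerService"
        private const val EXTRA_DURATION_MS = "extra_duration_ms"
        private const val DEFAULT_DURATION_MS = 30_000L

        // Builds the Intent that starts the service for the given listening window.
        fun createIntent(context: Context, durationMs: Long = DEFAULT_DURATION_MS): Intent =
            Intent(context, SpeechRecognizerService::class.java)
                .putExtra(EXTRA_DURATION_MS, durationMs)
    }
}
```

With this shape, the receiver could start the service with `context.startService(SpeechRecognizerService.createIntent(context))`. Keep in mind that Android 8.0 (API level 26) and later restrict starting services from the background, and newer versions further limit microphone access from the background, so on current devices this would likely need to become a foreground service.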

We start the service from UserPresentReceiver when the screen turns on:

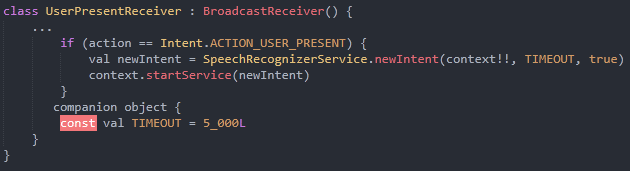

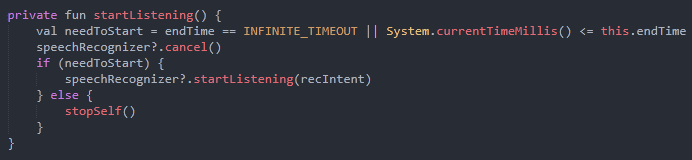

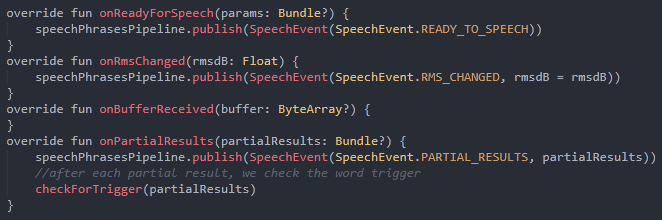

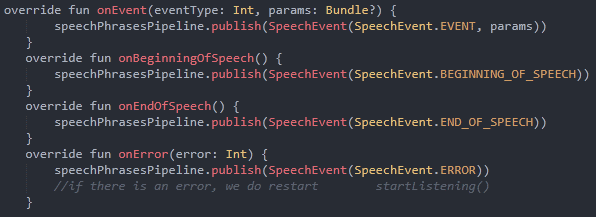

Then we override the service lifecycle methods.

If the listening time has not run out yet, we restart speechRecognizer:

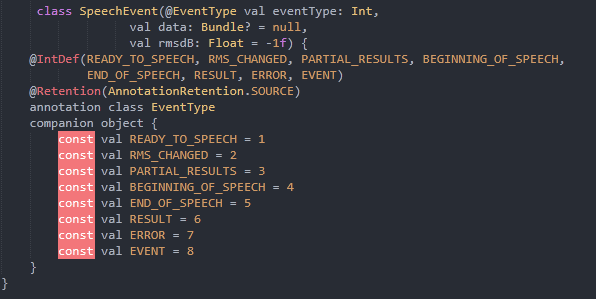

To make working with RecognitionListener events more convenient, we build a dedicated pipeline.

First, we create a class that describes an event coming from RecognitionListener:

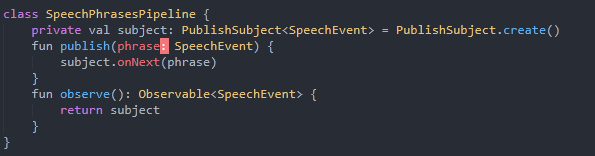

Now we create the SpeechPhrasesPipeline class, which contains a PublishSubject:

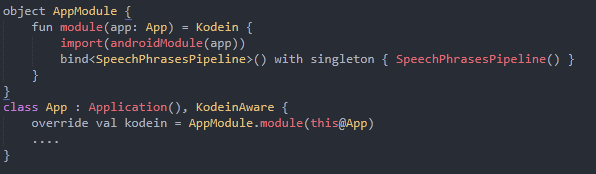
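A sketch of both classes, assuming RxJava 2 (package io.reactivex; RxJava 3 moved it to io.reactivex.rxjava3). The article refers to events such as SpeechEvent.RESULT and SpeechEvent.END_OF_SPEECH; modelling them as a sealed class with the subclass names below is an assumption, as are the method names publish() and events().

```kotlin
import io.reactivex.Observable
import io.reactivex.subjects.PublishSubject

// One RecognitionListener callback turned into a value that can travel through Rx.
sealed class SpeechEvent {
    object ReadyForSpeech : SpeechEvent()
    object BeginningOfSpeech : SpeechEvent()
    object EndOfSpeech : SpeechEvent()
    data class RmsChanged(val rmsdB: Float) : SpeechEvent()
    data class PartialResult(val phrases: List<String>) : SpeechEvent()
    data class Result(val phrases: List<String>) : SpeechEvent()
    data class Error(val code: Int) : SpeechEvent()
}

// App-wide event bus: the service publishes, Activities and Fragments subscribe.
class SpeechPhrasesPipeline {
    private val subject = PublishSubject.create<SpeechEvent>()

    // Called by the service from its RecognitionListener methods (main thread).
    fun publish(event: SpeechEvent) = subject.onNext(event)

    // Read-only stream for subscribers; hide() prevents casting back to the Subject.
    fun events(): Observable<SpeechEvent> = subject.hide()
}
```

A PublishSubject only delivers events emitted after a subscriber attaches, which suits a live stream of speech events; exposing it through hide() keeps subscribers from pushing their own events into the bus.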

We create a SpeechPhrasesPipeline singleton in the Application's Kodein module. Through this singleton, SpeechEvent events can be delivered to subscribers anywhere in the app.

With the help of Kodein, we inject SpeechPhrasesPipeline into SpeechRecognizerService.
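A sketch of the Application-level binding, assuming Kodein-DI 5.x/6.x (the org.kodein.di.generic API; version 7 renamed these types to DI and DIAware). The App class name is hypothetical, and SpeechPhrasesPipeline is the class described above, assumed to have a no-argument constructor.

```kotlin
import android.app.Application
import org.kodein.di.Kodein
import org.kodein.di.KodeinAware
import org.kodein.di.generic.bind
import org.kodein.di.generic.singleton

// Application-level container: one SpeechPhrasesPipeline shared by the whole app.
class App : Application(), KodeinAware {
    override val kodein = Kodein.lazy {
        bind<SpeechPhrasesPipeline>() with singleton { SpeechPhrasesPipeline() }
    }
}
```

The App class also has to be set as `android:name` of the `<application>` element in the manifest. A KodeinAware service, for example one declaring `override val kodein by lazy { (applicationContext as App).kodein }`, can then obtain the same object with `private val pipeline: SpeechPhrasesPipeline by instance()` (imported from org.kodein.di.generic).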

Now we need to implement the RecognitionListener methods.

To visualize the voice, we use SpeechRecognitionView. You can find an example of working with this View in the app's code.

Processing speech in Activities/Fragments

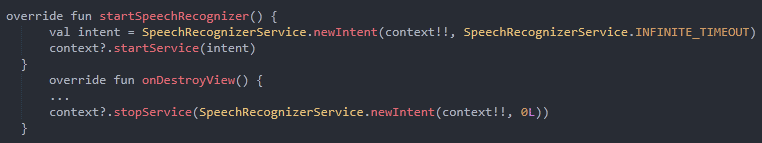

To start and set up the service from an Activity or Fragment, we use:

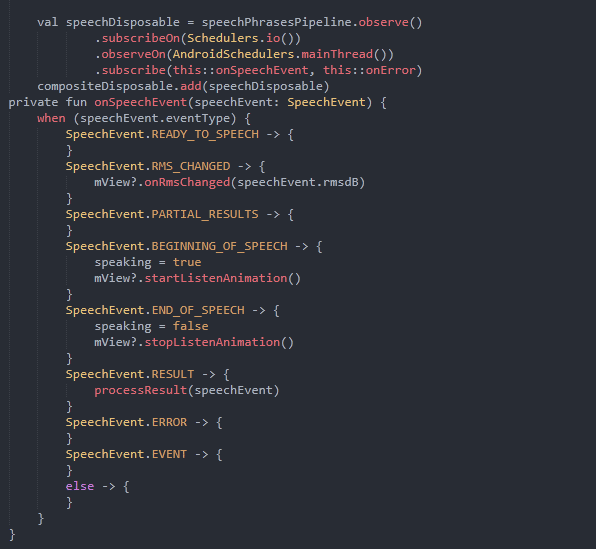

To receive events from the service, we use the SpeechPhrasesPipeline singleton:

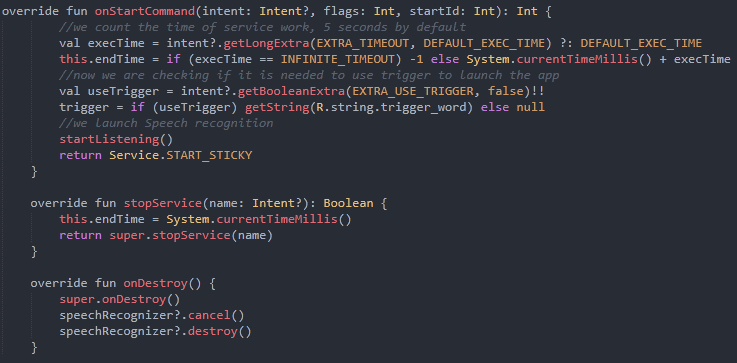

The recognition results arrive with the SpeechEvent.RESULT event.
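The article does not show how the keyword is matched inside a SpeechEvent.RESULT event. One possible approach, sketched below as a hypothetical containsTrigger() helper in plain Kotlin, checks every recognition alternative for the trigger phrase as a sequence of whole words, ignoring case and punctuation.

```kotlin
// Splits on anything that is not a letter or a digit.
private val WORD_SEPARATORS = Regex("[^\\p{L}\\p{N}]+")

private fun words(text: String): List<String> =
    text.lowercase().split(WORD_SEPARATORS).filter { it.isNotEmpty() }

// Returns true if any recognized phrase contains the trigger as consecutive whole words.
// Recognizers usually return several alternatives, so all of them are checked.
fun containsTrigger(phrases: List<String>, trigger: String): Boolean {
    val wanted = words(trigger)
    if (wanted.isEmpty()) return false
    return phrases.any { phrase ->
        val got = words(phrase)
        // Slide a window of wanted.size words over the phrase (empty range if too short).
        (0..got.size - wanted.size).any { start ->
            got.subList(start, start + wanted.size) == wanted
        }
    }
}
```

For example, `containsTrigger(listOf("Hey, what TIME is it?"), "what time")` returns true, while "timetable" does not match the trigger "time", because only whole words are compared.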

Real time reproduction

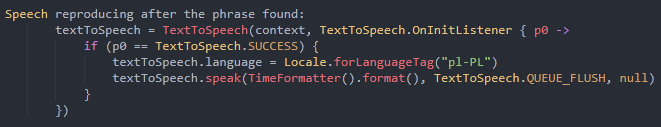

The last step is presenting the date. To do this, after finding the keyword in a SpeechEvent.RESULT event, we call the following lines:

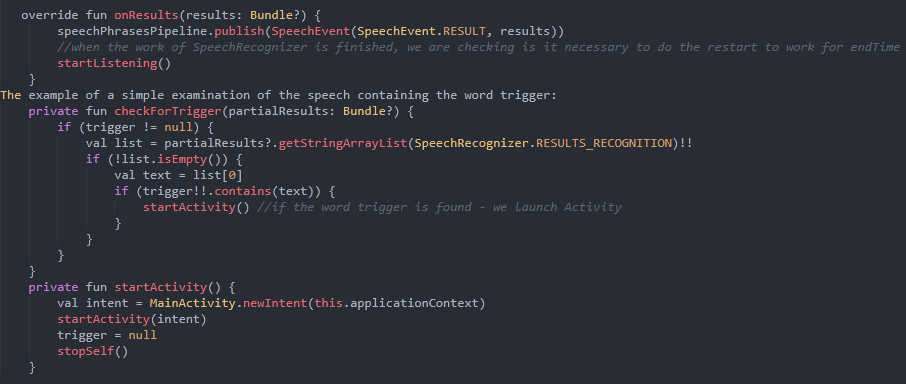
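The conclusions mention TextToSpeech, so one plausible way to present the date is to speak it aloud. The sketch below assumes that; DateSpeaker, the date pattern, the US English locale and the utterance ID are hypothetical choices.

```kotlin
import android.content.Context
import android.speech.tts.TextToSpeech
import java.text.SimpleDateFormat
import java.util.Date
import java.util.Locale

// Speaks the current date and time once the TTS engine is ready.
class DateSpeaker(context: Context) : TextToSpeech.OnInitListener {

    // Set by onInit(); declared first so its initializer runs before the engine is created.
    private var ready = false
    private val tts = TextToSpeech(context.applicationContext, this)

    override fun onInit(status: Int) {
        ready = status == TextToSpeech.SUCCESS
        if (ready) tts.setLanguage(Locale.US)
    }

    fun speakCurrentDate() {
        if (!ready) return
        val text = SimpleDateFormat("EEEE, MMMM d, HH:mm", Locale.US).format(Date())
        // QUEUE_FLUSH drops anything still being spoken and says the new text right away.
        tts.speak(text, TextToSpeech.QUEUE_FLUSH, null, "current-date")
    }

    // Release the engine, e.g. from Activity.onDestroy().
    fun shutdown() {
        tts.stop()
        tts.shutdown()
    }
}
```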

Closing the application when the user is inactive

The application closes if the user is inactive for more than 5 seconds. For this, we need a property holding the time of the user's last activity:

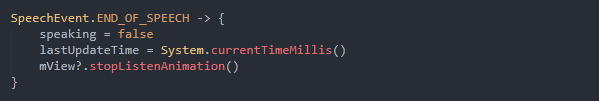

Then, on every SpeechEvent.END_OF_SPEECH event, we update this time:

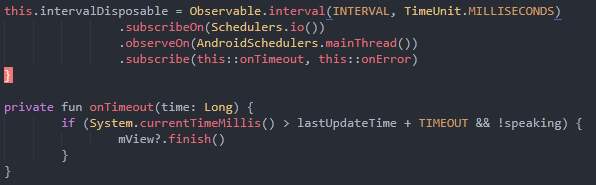
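This bookkeeping can be kept in a tiny class that the Activity updates on each END_OF_SPEECH event. InactivityTracker is a hypothetical name, and the caller supplies the current time (for example SystemClock.elapsedRealtime()) so the logic stays testable without a device.

```kotlin
// Remembers when the user last spoke and reports whether the timeout has passed.
// All times are milliseconds from a clock chosen by the caller.
class InactivityTracker(private val timeoutMs: Long, startMs: Long) {

    var lastActivityMs: Long = startMs
        private set

    // Call on every END_OF_SPEECH event.
    fun onUserActivity(nowMs: Long) {
        lastActivityMs = nowMs
    }

    // "Inactive for more than timeoutMs", so exactly timeoutMs still counts as active.
    fun isInactive(nowMs: Long): Boolean = nowMs - lastActivityMs > timeoutMs
}
```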

And finally, we add a timer using Observable.interval.

This Observable calls onNext every second. If the user has been inactive for more than 5 seconds, the application is closed.
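A sketch of that timer, assuming RxJava 2 and RxAndroid 2. startInactivityTimer() is a hypothetical helper; the isInactive lambda stands for whatever check the Activity makes against its last-activity timestamp.

```kotlin
import io.reactivex.Observable
import io.reactivex.android.schedulers.AndroidSchedulers
import io.reactivex.disposables.Disposable
import java.util.concurrent.TimeUnit

// Checks once per second and calls onTimeout the first time isInactive() is true.
fun startInactivityTimer(isInactive: () -> Boolean, onTimeout: () -> Unit): Disposable =
    Observable.interval(1, TimeUnit.SECONDS)       // emits 0, 1, 2, ... every second
        .observeOn(AndroidSchedulers.mainThread()) // run the check and the close on the UI thread
        .filter { isInactive() }
        .take(1)                                   // fire once, then complete
        .subscribe { onTimeout() }
```

For example, an Activity holding a hypothetical `lastActivityMs` property could keep `disposable = startInactivityTimer({ System.currentTimeMillis() - lastActivityMs > 5_000 }) { finish() }` and call `disposable.dispose()` in onDestroy() so the timer does not outlive the screen.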

Conclusions

These examples are not working prototypes; they only demonstrate the capabilities of SpeechRecognizer and TextToSpeech on Android. For convenience, we also used reactive programming through libraries such as RxKotlin and RxAndroid.